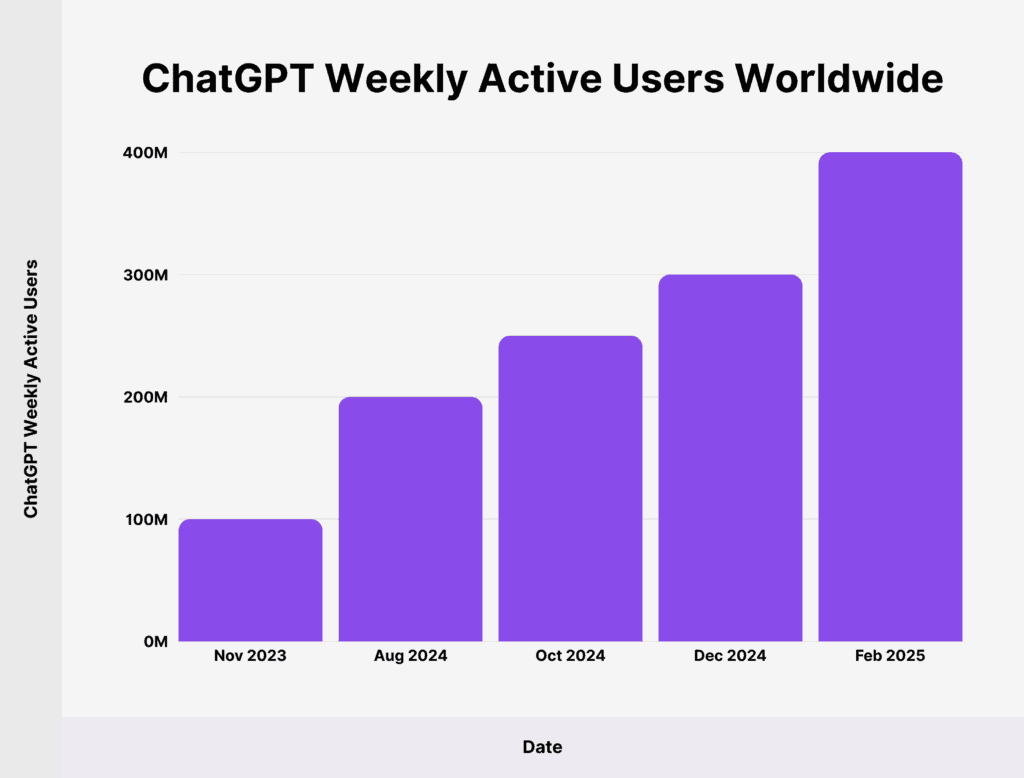

ChatGPT Users Beware: A landmark U.S. court decision has shaken the trust millions of users place in OpenAI’s ChatGPT. In May 2025, a federal magistrate judge ordered OpenAI to preserve all user chat data, including conversations users believed were deleted. The order came during a high-stakes legal battle between The New York Times and OpenAI/Microsoft, and it has far-reaching implications for how AI tools like ChatGPT handle personal and sensitive data.

If you’ve ever typed something confidential into ChatGPT—whether it’s your next startup idea, a draft email, or a deeply personal question—this decision means that information may no longer be private. Let’s break it down: what happened, why it matters, who is affected, and—most importantly—what you can do to protect your data right now.

ChatGPT Users Beware

The court ruling requiring OpenAI to preserve all ChatGPT conversations—deleted or not—marks a pivotal moment in the history of digital privacy. It’s a wake-up call not just for AI companies, but for users, businesses, and institutions that rely on these tools every day. Until stronger legal safeguards are in place, the responsibility falls on users to understand their data exposure and act accordingly. Whether that means switching to a more secure plan, updating internal company policies, or simply being more cautious in your daily AI use, this is a turning point we can’t afford to ignore.

| Feature | Details |
|---|---|
| Case Name | New York Times v. OpenAI & Microsoft |
| Court | U.S. District Court, Southern District of New York |
| Judge | Magistrate Judge Ona T. Wang |
| Ruling Date | May 2025 |
| Ruling Summary | OpenAI must preserve all ChatGPT user chats, including deleted logs |
| Affected Users | ChatGPT Free, Plus, Pro, Team, and API users without Zero Data Retention |
| Unaffected Users | ChatGPT Enterprise, Edu, API with Zero Data Retention (ZDR) |
| Legal Impact | Data now subject to legal discovery in copyright litigation |
| Official OpenAI Page | OpenAI Help Center |

Timeline of Events

| Date | Event |
|---|---|
| Dec 2023 | The New York Times files lawsuit against OpenAI and Microsoft |
| Feb 2025 | Court begins data access hearings |
| May 2025 | Judge issues preservation order requiring OpenAI to store all user conversations |
| June 2025 | OpenAI files an appeal and proposes legal “AI privilege” |

What Happened? A Plain English Explanation

During ongoing litigation, The New York Times demanded access to output logs from ChatGPT to determine whether its copyrighted content had been used improperly. The court responded with a data preservation order, compelling OpenAI to store and preserve all user conversations—even those that had previously been deleted by the user or marked as temporary.

Essentially, the judge ruled that user chats are potential legal evidence and must be saved until the court orders otherwise. The implications? Millions of users who believed they had control over their data are now caught in the middle of a corporate legal fight.

Why This Ruling Matters for Everyone

Let’s be clear: this isn’t just about lawyers, tech companies, or AI researchers. This ruling affects everyday users, from college students using ChatGPT to write essays to HR teams using it to draft sensitive internal documents.

If you use ChatGPT on a Free, Plus, Pro, or Team plan, or through the API without Zero Data Retention, your data, including chats you have deleted, could be stored and examined in legal proceedings.

OpenAI’s Response

OpenAI strongly opposes the ruling and argues that it threatens user trust. CEO Sam Altman has publicly advocated for a new legal concept, “AI privilege,” similar to attorney-client or doctor-patient confidentiality. The company is appealing the ruling while complying with it in the meantime.

What Is “AI Privilege”?

“AI privilege” is a proposed legal protection that would classify your conversations with AI tools like ChatGPT as confidential—making them inadmissible in court or protected from third-party access. While not yet law, it reflects growing concern over how personal AI interactions are handled and protected.

Until such protections exist, however, your conversations with ChatGPT are legally vulnerable unless you use a tier the order does not cover: Enterprise, Edu, or the API with Zero Data Retention.

Implications for Businesses and Professionals

1. Legal Teams

Attorneys using AI to draft contracts or summarize client files should rethink their workflows. Anything typed into ChatGPT is now potentially discoverable in court—meaning a third party could request access.

2. Healthcare Providers

While most responsible professionals avoid putting Protected Health Information (PHI) into unsecured tools, some may use ChatGPT for brainstorming or non-identifiable use. Still, HIPAA violations are a risk if any patient data leaks.

3. Startups and Product Teams

Got a brilliant app idea or algorithm concept? If you share it with ChatGPT on a Free, Plus, Pro, or Team plan, it might be stored for as long as the order stands and could be exposed during litigation.

4. Educators and Researchers

Universities using ChatGPT for research may now need to adopt Enterprise or Edu plans, which fall outside the preservation order, to keep research conversations from being retained under it.

Plan Comparison: What’s Safe and What’s Not

| Plan | Deleted Data Truly Gone? | Zero Data Retention Option | Best For |
|---|---|---|---|
| Free | No | No | Personal, casual users |
| Plus / Pro | No | No | Professionals, freelancers |
| Team | No | No | Small business teams |
| Enterprise | Yes | Yes | Businesses, legal/medical teams |
| Edu | Yes | Yes | Academic institutions |
| API (No ZDR) | No | No | General developers |
| API (ZDR Enabled) | Yes | Yes | High-security app integrations |

Pro tip: If data privacy is critical to your work, use ChatGPT Enterprise or API with ZDR. These plans give you actual control over data retention and deletion.

Historical Comparison: This Isn’t the First Time

This case echoes earlier tech battles over privacy:

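- Apple v. FBI (2016): Apple refused a court order to build software that would unlock an iPhone used by one of the San Bernardino attackers, citing user privacy.

- Google’s Search Logs (2006): The U.S. Department of Justice subpoenaed Google for search records, sparking outrage. Google fought the subpoena, and a federal judge ultimately limited what the company had to hand over.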

Now, in 2025, we’re seeing a similar crossroads—this time with conversational AI. The legal system is still catching up to the technology, and users are caught in the middle.

What Can You Do Right Now?

Here’s a simple action plan for individuals and companies:

Step 1: Know Which Plan You’re On

Go to your OpenAI account dashboard and check whether you’re using a privacy-friendly plan. If not, consider upgrading.

Step 2: Don’t Share Sensitive Information

Until legal protections are in place, avoid sharing:

- Passwords

- Financial data

- Legal case notes

- Trade secrets

- Personal health or identity info
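For teams that want a safety net on top of good habits, one option is to scrub prompts before they leave your machine. The Python sketch below is a minimal illustration, not a complete solution: the three patterns (email addresses, U.S. Social Security numbers, and card-like digit runs) and the `redact` helper are assumptions chosen for the example. Pattern matching cannot recognize passwords, trade secrets, or case notes, so it does not replace the rule above.

```python
import re

# Illustrative patterns only (assumptions for this sketch). A real deployment
# would need a broader, tested rule set or a dedicated data-loss-prevention tool.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replace every pattern match with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Email jane.doe@example.com about SSN 123-45-6789."
print(redact(prompt))  # Email [EMAIL] about SSN [SSN].
```

Run the prompt through `redact` before pasting it into any chat tool, and treat anything the patterns miss as still sensitive.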

Step 3: Create an Internal Policy for AI Use

If you’re managing a team or business, write an official policy that includes:

- Which tools are allowed

- What data can be input

- Who has access to Enterprise-level tools
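If your team prefers something it can check automatically, the three policy points above can also be written down as data. The Python sketch below is one hypothetical way to do that: the `AIUsePolicy` class, the tool names, and the data categories are all invented for illustration and do not correspond to any OpenAI feature.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUsePolicy:
    """Hypothetical policy record covering tools, data types, and access."""
    allowed_tools: frozenset[str]
    blocked_data: frozenset[str]
    enterprise_users: frozenset[str]

    def violations(self, user: str, tool: str, data_types: set[str]) -> list[str]:
        """Return the reasons a request breaks the policy (empty list = OK)."""
        problems = []
        if tool not in self.allowed_tools:
            problems.append(f"tool not approved: {tool}")
        if tool == "chatgpt-enterprise" and user not in self.enterprise_users:
            problems.append(f"no Enterprise access: {user}")
        for data_type in sorted(data_types & self.blocked_data):
            problems.append(f"blocked data type: {data_type}")
        return problems


# Example values are assumptions, not recommendations.
policy = AIUsePolicy(
    allowed_tools=frozenset({"chatgpt-enterprise"}),
    blocked_data=frozenset({"passwords", "trade_secrets", "health_info"}),
    enterprise_users=frozenset({"alice"}),
)

print(policy.violations("bob", "chatgpt-free", {"trade_secrets"}))
```

A written policy still matters more than the code. The point of the sketch is only that a short, explicit list of tools, data types, and people is easy to review and enforce.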

Step 4: Monitor the Legal Landscape

The final outcome of OpenAI’s appeal could reverse or expand this ruling. Stay informed by subscribing to trusted tech and legal newsletters, such as:

- Lawfare

- Electronic Frontier Foundation

- OpenAI Newsroom
